The ethics of artificial intelligence (AI)

Why new technologies have to master ethical questions, too

This year we will discover that AI ethics must be codified and established in a realistic, pragmatic way. If we fail to do this, we will endanger the right to self-determination not only of every individual, but also of companies and of society as a whole.

In future, ethics enshrined in law will have to be a self-evident part of all technological progress. © depositphotos

Artificial intelligence: even too “ghastly” for the police

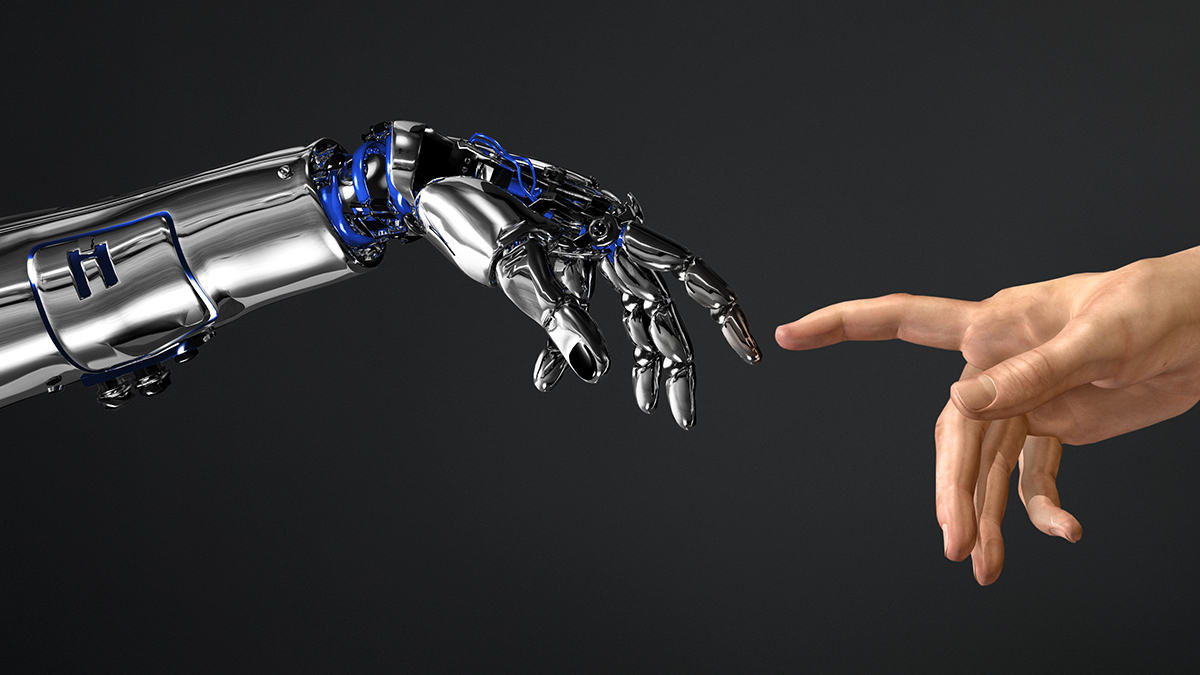

Despite being a highly experimental and often flawed technology, Artificial Intelligence (AI) is already in widespread use. It is often used when we apply for a loan or a job. It is used to police our neighbourhoods, to scan our faces to check us against watchlists when we shop and walk around in public, to sentence us when we are brought before a judge and to conduct aspects of warfare. All this is happening without a legal framework to ensure that AI use is transparent, accountable and responsible. In 2020 we will realise that this must change.

Concerns about AI are not confined to civil-liberties and human-rights activists. London Metropolitan Police Commissioner Cressida Dick has warned that the UK risks becoming a “ghastly, Orwellian, omniscient police state” and has called for law-enforcement agencies to engage with ethical dilemmas posed by AI and other technologies. Companies that make and sell facial-recognition technology, such as Microsoft, Google and Amazon, have repeatedly asked governments to pass laws governing its use – so far to little avail.

Technology is not “neutral”

Many people would argue that this debate should go even wider than AI, calling on us to embed ethics into every stage of how we develop technology. This means asking not just “Can we build it?” but “Should we?” It means examining the sources of funding for our technology (Saudi Arabia, for instance, is a big investor in SoftBank’s Vision Fund). And it means recognising that the lack of diversity and inclusion in technology creates software and tools that exclude much of the population, just as our datasets contain deep and damaging bias.

Video highlight on this subject:

Our exclusive interview with Cornelia Diethelm, founder of the Centre for Digital Responsibility (CDR)

We’re “amusing ourselves to death”, Neil Postman once predicted, describing his discomfort with the overpowering force of television. That was back in 1985. Today, once again, we are feeling apprehension toward new technologies: Will machines soon outstrip us humans? In our working lives? In our love lives?

Watch the full interview: “Why digitization requires ethics”

We will challenge the idea, long held by many technology enthusiasts, that technology is “neutral”, and that we should allow technology companies to make money while they refuse to take responsibility beyond the bare minimum of legal compliance. Kate Crawford, co-founder of the AI Now Institute, challenged this position in her 2018 lecture to the Royal Society when she asked, “What is neutral? The way the world is now? Do we think the world looks neutral now?” And Shoshana Zuboff, in her 2019 book The Age of Surveillance Capitalism, argued that the power of technology can be understood through the answers to three questions: “Who knows? Who decides? Who decides who decides?” Even a brief survey of the world of technology today shows clearly that this power must be contained.

Ethics as law

In 2020 we will understand the need to codify our ideas about what would make AI – and technology as a whole – ethical. Decision-makers in government and the private sector are already exploring ethics as part of their thinking and planning for the future of work and society. This year we will need to continue that search and embed ethical values in legislation.

What’s missing at the moment is a rigorous approach to AI ethics that is actionable, measurable and comparable across stakeholders, organisations and countries. There is little use, for example, in asking STEM workers to take a Hippocratic oath, having companies appoint a chief ethics officer or offering organisations a dizzying array of AI-ethics principles and guidelines to implement if we cannot test whether these ideas actually work.

In the year ahead we will see the need for that rigour. Until now, AI ethics has felt like something to have a pleasant debate about in the academy. In 2020 we will realise that failing to take practical steps to embed ethical principles in the way we live will have catastrophic effects.

About the author: Stephanie Hare

Stephanie Hare is a researcher and broadcaster working across technology, politics and history. Her book on technology ethics will be published this year. Her report appears here as part of our publishing partnership with Wired UK.

A period of rapid change lies ahead, driven by innovations in the fields of quantum computing and artificial intelligence (AI). We at Vontobel are following these developments with curiosity and attention. This enables us to identify new investment opportunities at an early stage in order to benefit from the potential of AI-based investments.


Who are we? How do we live today? And how will digitization change our lives? How the future will unfold is preoccupying society more than ever, with engineers, doctors, politicians – each one of us, in fact – seeking answers. This report on “Ethics in AI” was written as part of our publishing partnership with WIRED, one of the leading tech magazines. It is one of many contributions that shed light on the theme of the “digitized society” from a new, inspiring perspective. We are publishing them here as part of our series “Impact”.